Deepfakes, a portmanteau of “deep learning” and “fake,” are highly convincing but entirely fabricated audio, video, or text content created with artificial intelligence. While the underlying technology has legitimate applications in fields such as entertainment and digital art, it has also raised significant concerns because of its malicious use in fraud, privacy violations, and identity theft.

Safeguarding Against Deepfake Fraud

Addressing the growing threat of deepfake fraud calls for a pragmatic, layered strategy, and a VPN for PC can be one part of those cybersecurity defenses. The VMware Global Incident Response Threat Report, based on a 2022 survey of 125 cybersecurity and incident response professionals, found that 66 percent of respondents had experienced a security incident involving deepfake use in the past 12 months.

This marks a notable 13 percent increase compared to the previous year, underscoring the escalating risk. A VPN on your Windows system encrypts the traffic between your device and the VPN server, creating a more secure online connection.

A VPN does not detect deceptive videos or audio recordings, but it makes it harder for attackers to intercept the personal data they could use to build a convincing impersonation, particularly on untrusted networks. In that sense, a Windows VPN limits unauthorized access to your data in transit and supports the broader effort to counter deepfake fraud.

Cybercriminals often use AI to impersonate someone convincingly, using stolen personal information to commit various types of fraud, such as opening fraudulent bank accounts, obtaining loans, or making unauthorized transactions. This not only puts the individual’s finances at risk but also damages their credit history and reputation.

Besides VPNs, password management is essential in safeguarding personal information online. Password managers not only help users generate and store complex, unique passwords for different accounts but also offer additional layers of security, like two-factor authentication.
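To make the idea of "complex, unique passwords" concrete, here is a minimal Python sketch of what a password manager's generator might do under the hood. It is an illustration only, not how any particular product works: the function names `generate_password` and `generate_unique_passwords`, the default length of 20, and the rule requiring one character from each class are all assumptions made for this example.

```python
import secrets
import string

# Character pool: letters, digits, and punctuation (an assumption for this sketch).
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password containing all four character classes."""
    if length < 4:
        raise ValueError("length must be at least 4 to fit every character class")
    while True:
        # secrets.choice draws from a cryptographically secure random source.
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


def generate_unique_passwords(accounts: list[str], length: int = 20) -> dict[str, str]:
    """Generate a separate password for each account name (hypothetical helper)."""
    return {account: generate_password(length) for account in accounts}


if __name__ == "__main__":
    for account, password in generate_unique_passwords(["bank", "email"]).items():
        print(f"{account}: {password}")
```

The sketch uses the standard library's `secrets` module rather than `random`, because `random` is not designed for security-sensitive values. A real password manager would also encrypt and store the results, which this example leaves out.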

The High Stakes of Deepfake Deception in Cryptocurrency Scams

Scammers can also manipulate videos to impersonate influential figures, like business leaders or celebrities, promoting fake investment opportunities in cryptocurrency. These deceitful tactics can lure unsuspecting victims into investing their hard-earned money, resulting in substantial financial losses.

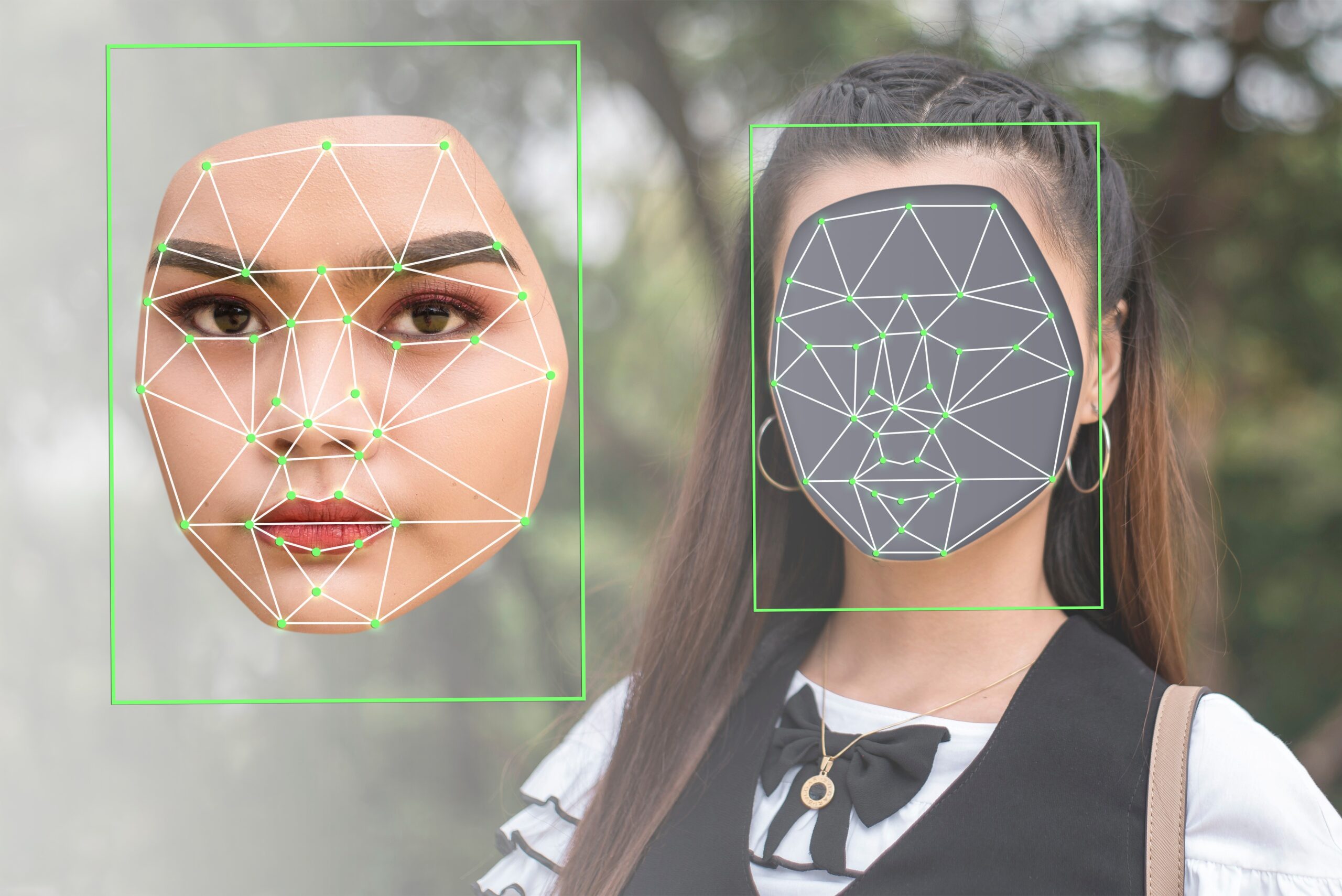

By using machine learning algorithms to synthesize facial features and voices, malicious actors can create fabricated content that appears shockingly realistic. These fabricated materials can be employed to create compromising or defamatory videos, which can be weaponized to tarnish an individual’s reputation or harass them.

Here are some specific examples of deepfake-related issues:

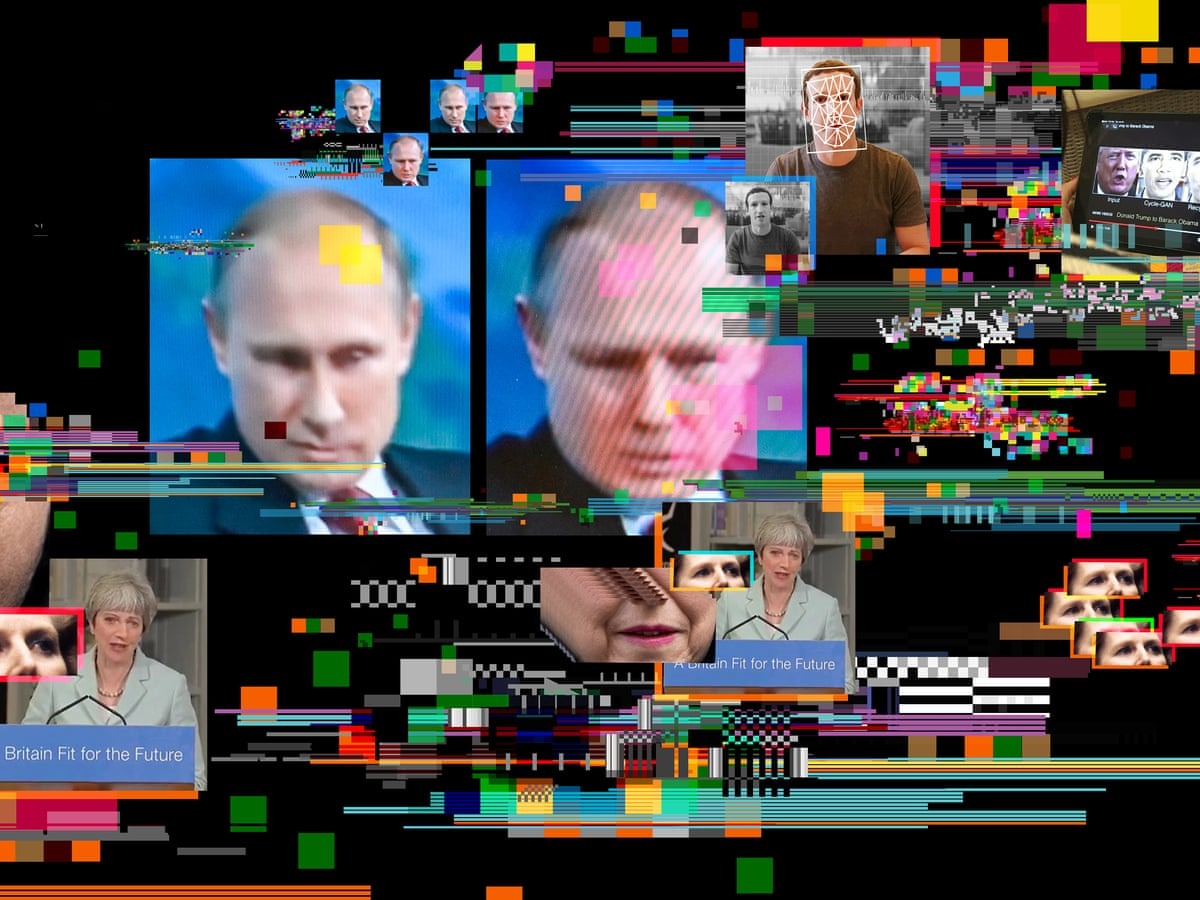

Misinformation and Fake News

In 2018, BuzzFeed and actor Jordan Peele collaborated to create a deepfake video of former President Barack Obama. Their aim was to raise awareness of the potential dangers of deepfake technology.

It was part of a larger project called “Deepfake PSA,” where Peele used his impersonation skills to create a video that showed the realistic manipulation of facial expressions and voice to make it seem like Obama was saying things he never actually said.

Revenge Porn

Numerous cases have been reported where individuals have used deepfake technology to create explicit videos or images featuring someone’s likeness without their consent. This is often used for revenge or harassment, causing significant harm to the victims.

Voice-Based Scams

Criminals have used deepfake voice technology to impersonate a victim’s family member or trusted authority figure, tricking them into revealing sensitive information or transferring money.

Deterioration of Trust

The widespread discussion of deepfakes has led to a general sense of distrust regarding the authenticity of digital content, particularly online videos. This makes it increasingly difficult for the public to differentiate between real and manipulated media.

Deepfake Pornography

Celebrities like Scarlett Johansson have been targeted by deepfake pornography creators, who superimpose their faces onto explicit content. This not only invades the privacy of these individuals but also poses reputational risks.

Deepfake pornography can also have severe consequences for victims’ mental well-being. The invasion of privacy and the creation of explicit content with manipulated faces can cause lasting emotional distress for the individuals targeted.

Inadequate Regulation

The lack of robust legal and regulatory frameworks has allowed deepfake technology to proliferate. In some cases, it’s challenging to take legal action against those responsible for creating or disseminating deepfakes.

Regulatory Challenges

The rise of deepfake technology has left lawmakers and authorities grappling with how to regulate and combat its misuse. Unlike traditional forms of fraud or harassment, deepfakes often blur the lines between reality and fabrication, making it difficult to identify and prosecute offenders.

To address these concerns, some governments have introduced legislation to tackle deepfake-related crimes. For instance, in the United States, the Malicious Deep Fake Prohibition Act was introduced in the Senate in 2018 to criminalize the creation and dissemination of deepfakes with malicious intent, although it did not become law. The bill aimed to protect individuals from the harm caused by fraudulent, maliciously generated content.

Despite these efforts, regulating deepfake technology remains a complex challenge. The technology is advancing rapidly, making it difficult for laws and regulations to keep up. In addition, the line between legitimate use and malicious intent is often blurry, so any regulation has to strike a balance between safeguarding free expression and preventing harmful misuse.

Potential Implications

The implications of deepfake technology extend beyond individual victimization and financial losses. Deepfakes have the potential to erode public trust, spread misinformation on a massive scale, and undermine political stability. Their use to manipulate public opinion or to impersonate political figures for nefarious purposes is a growing concern in democratic societies.

To mitigate these risks, technology companies, government agencies, and researchers are continually developing detection methods and tools to identify deepfake content. These efforts aim to safeguard the integrity of digital content and protect individuals from the negative consequences of maliciously created deepfakes.

Ethical and Societal Implications of Deepfake Technology

The entertainment industry has been significantly affected by the rise of deepfake technology. While the technology has opened new creative possibilities for filmmakers and content creators, it has also raised ethical concerns. Using deepfakes to replace actors or recreate historical figures in movies and TV shows prompts questions about consent, authenticity, and the potential for manipulation.

In the world of journalism, deepfakes present a challenge as well. The ability to create realistic videos and audio recordings of public figures has the potential to spread misinformation and fake news rapidly. This threatens the credibility of the media and makes it more difficult for the public to discern fact from fiction.

Also, the development of deepfake technology is not limited to well-funded malicious actors. As the tools for creating deepfakes become more accessible, there is a growing concern that individuals with ill intentions can easily generate fake content for personal gain or revenge.

In conclusion, while deepfake technology holds promise in various fields, its misuse in fraudulent activities, privacy violations, and identity theft presents a growing challenge. Effective regulation and detection methods are essential to curb the darker aspects of deepfake technology, ensuring that it serves the interests of society rather than endangering it.

As technology evolves, it is crucial for all stakeholders to remain vigilant and proactive in addressing the threats posed by deepfakes. Finding a balance between the potential benefits and risks of this technology will be an ongoing and complex endeavor that requires the collaboration of governments, tech companies, and the public.